How can you protect your Internet-connected VoIP system?

It Doesn't Take Much to Stop a VoIP Phone System Connected to the Internet in Its Tracks... How Can You Protect It?

The short answer is that you can't always protect a VoIP phone system that's connected to the Internet.

If you have a VoIP system that uses real channelized voice T1s or PRIs, and it doesn't need access to the Internet for incoming or outgoing calls, hackers / bad guys can't bring it down through the Internet. The local phones in the office will work fine as long as the local network is working.

If you have remote workers using VoIP system phones connecting via the Internet, you'll never have a 100% hacker-proof system. A VoIP system that's not connected to the Internet, and that has its own network with separate wiring and switches within the office, is about as robust as any TDM system. The main difference is how the voice is digitized - in a continuous stream (TDM) or in packets (VoIP).

Not connecting a VoIP phone system to the Internet isn't very practical for most companies. Still, sometimes you need to temporarily disable the remote VoIP phones to get the phones in the office back up while you figure out what's going on.

Best practice for VoIP is to have a separate network with separate wiring and switches, and a separate pipe (or pipes) to the Internet, preferably a data T1 or Symmetric DSL (SDSL), which has the same upload and download speeds. That turns troubleshooting into a manageable process, whether something's broken or hackers are attacking the system.

I recently saw a VoIP system brought down that was sharing a LAN with PCs. The PCs seemed to be working - maybe a little slow. But the VoIP stations were very close to dead. There were four 24-port switches in the rack with most of the port lights flashing simultaneously like crazy. I wasn't familiar with that particular network, but I was pretty sure the lights weren't supposed to be doing that.

There are two ways to troubleshoot that. One is to hook up my Ethershark Tap and fire up Wireshark to see what's going on. The other is the quick and simple way: disconnect each patch cord from the switch, one at a time. About halfway through I pulled a plug and everything stopped flashing. Plugged it back in and the flashing started. Removed it and the constant flashing stopped.

Traced the patch cord to the other end and looked at the floor plan to see where that cable went. Found a Brother multi-function fax, scanner, and printer on that cable. Reset the machine and reconnected the patch cord to the switch. Both the PC and VoIP problems were solved.

If they'd had separate networks for VoIP and PCs, the phones would never have gone down - but the computers wouldn't have worked well until the fax machine was reset.

With a VoIP system that has SIP trunks, a VoIP server in a data center, or hosted VoIP, troubleshooting is a lot harder because it's no longer confined to one building - the problem could be in a zillion places.

I recently worked on another VoIP system with the server in a data center maybe 100 miles away. There were a lot of phones with four extensions (four registrations) each, but most had only one or two lines lit up to show that the line was registered. Sometimes the lines that were lit up would change on the phone. Extensions were registering and unregistering seemingly by themselves (the phones were set up to register every 180 seconds).
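
If the registration events can be pulled out of the server log, a few lines of Python make that kind of flapping easier to see. This is only a sketch: the (timestamp, extension, state) tuple layout and the function name are assumptions for illustration, not the log format of any particular VoIP server.

```python
from collections import Counter


def count_flaps(events):
    """Count registered -> unregistered transitions per extension.

    `events` is an iterable of (timestamp_seconds, extension, state) tuples,
    where state is "registered" or "unregistered". This layout is a
    hypothetical stand-in for whatever the server's log really contains.
    """
    last_state = {}
    flaps = Counter()
    # Sorting the tuples puts the events in timestamp order.
    for _ts, ext, state in sorted(events):
        if last_state.get(ext) == "registered" and state == "unregistered":
            flaps[ext] += 1
        last_state[ext] = state
    return flaps
```

An extension that simply re-registers on schedule never shows up in the result, so anything with a count above zero is worth a closer look.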

I did a tracert to Google and it looked fine. Did some tracerts to the VoIP server IP in the data center and they didn't look all that bad. Some hops were occasionally way over 100ms, but the results weren't consistent. What's going on?
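
A handful of traceroutes only gives a few samples per hop, so occasional spikes are easy to shrug off. If you collect more round-trip times for a hop, a small summary like the one below shows how often it goes over a threshold. This is a sketch under assumptions: the function name is made up, the samples are assumed to be in milliseconds, and the 100 ms default simply mirrors the figure mentioned above.

```python
from statistics import mean


def summarize_rtts(samples_ms, threshold_ms=100.0):
    """Summarize round-trip times (in milliseconds) collected for one hop."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    slow = [s for s in samples_ms if s > threshold_ms]
    return {
        "min": min(samples_ms),
        "avg": mean(samples_ms),
        "max": max(samples_ms),
        # Share of samples that exceeded the threshold.
        "slow_fraction": len(slow) / len(samples_ms),
    }
```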

I was able to log in to the VoIP server just fine. Logs looked really strange, with lots of registrations and dropped registrations. Too much information for me to figure out what was going on easily, so I went for the easiest solution - and rebooted the server.

Same problems when it came back up a few minutes later. I kept poking around to see if I could get a clue where to start but didn't change anything. Then everything came back up and was working fine. I must be a genius!

Put in a trouble ticket to the data center to see if they had an idea. They sure did. They had a DDoS (Distributed Denial of Service) attack on someone else in the data center, and their pipes were pretty filled with garbage. The tech said they were able to mitigate the DDoS attack. I asked how, but they wouldn't give me any other information.

An hour later I sent the tech a message asking how I would know that a DDoS was going on in the data center when it wasn't targeting the machine I was working on. He replied that I couldn't know. I could submit a ticket and they'd tell me. In the back of my mind, I'm wondering what kind of damage I can do trying to "fix" a perfectly good system that's being attacked by a DDoS? Kind of scary.

If the customer had hosted VoIP, it would have been much harder to figure out. Most hosted VoIP providers won't give you the time of day no matter what's going on. They don't want to look bad to prospective customers, and they know that everything ends up on the Internet eventually.

If the customer had a VoIP system on their premises the symptoms would have been different. The DDoS probably wouldn't have kept on-premises stations from registering or real phone lines from working, but any SIP trunks would have been down. That's a pretty good argument for having both real phone lines (POTS or T1) and SIP trunks if they're needed.

Why would someone DDoS a VoIP system? Who knows? Competition, wrong IP from the attacker, or more likely the attacker is demanding a ransom to stop the attack. Whatever the reason, bringing down something on the web, including VoIP, is cheap and easy. There are guys in other countries who control botnets (groups of PCs with malware that lets the attacker tell all of them to go to a particular URL or IP address) and who will do a DDoS for you by the hour or day. You pay by the number of simultaneous attacking computers you want, for however many hours you need.

Although the guys controlling the botnets are likely in some other country, some of the PCs they have control over could be in the US. The owner of the PC probably doesn't know they have botnet malware on the PC, as it's often undetectable by anti-virus software. The owner does get a clue about the malware when the botnet owner tells that PC to start an attack because the PC is likely to get pretty slow while the attack is going on.

There are anti-DDoS services that websites can pre-purchase that will mitigate future DDoS attacks to the point that customers will be able to buy stuff or otherwise interact with the site. That might cost $1,000 a month for the DDoS protection. These are fairly effective depending on how robust the attacker's botnet is, but these methods don't work well for VoIP, video conferencing or real-time gaming - because these applications need the packets to arrive at the other end in the correct order with very little delay (latency). That's probably not going to happen during a DDoS attack, whether you are using some kind of DDoS mitigation service or not.
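
One way to put a number on how unevenly packets are arriving is the interarrival jitter estimate that RFC 3550 defines for RTP: a running value J that is nudged toward the latest change in transit time D with J = J + (|D| - J) / 16. The sketch below applies that formula to plain lists of send and receive timestamps. The function name and the list-based input are assumptions for illustration; a real phone or server works from RTP timestamps as the packets arrive.

```python
def interarrival_jitter(send_times, recv_times):
    """Running jitter estimate using the RFC 3550 smoothing formula.

    Both lists hold timestamps in the same unit (for example milliseconds),
    one entry per packet, in arrival order.
    """
    if len(send_times) != len(recv_times):
        raise ValueError("timestamp lists must be the same length")
    jitter = 0.0
    prev_transit = None
    for sent, received in zip(send_times, recv_times):
        transit = received - sent
        if prev_transit is not None:
            # D: how much the transit time changed since the previous packet.
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16.0
        prev_transit = transit
    return jitter
```

If every packet takes the same time to cross the network the estimate stays at zero, no matter how long that time is. It only grows when the delay keeps changing from packet to packet, which is what a congested pipe tends to produce.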

If you call one of these DDoS mitigation services during an attack in the hopes of stopping it, the costs will be much higher. Probably many thousands of dollars. For a big ecommerce site, it's worth either paying the ransom asked by the attacker - or paying the DDoS mitigation service. If you have a real-time web application like VoIP, video conferencing or gaming, there won't be much that will stop a determined hacker. These real-time web applications are very fragile compared to just about anything else on the web.

If your VoIP server is being brought down because you're in the same data center that has another customer being DDoS attacked, but they're not targeting your server, your best bet is to have a hot backup server in another data center. That's neither hard nor painfully expensive. A DDoS attack might not trigger an automatic switchover to the backup server, but you could switch manually.

Another option is to always have a current backup of your server, fire up a server in another data center, and have the data center restore your backup to that server.

When you are programming the registrations on VoIP phones to a hosted system or an off-site server, it's a good idea not to use the static IP address of the remote system. If you have to change servers and you have the IP address of your system programmed into the phones, it could be a nightmare bringing them all back up (lots of hours on a system with a lot of phones). Use a new domain name or add the server to an existing domain name (like voip.google.com). Doing the DNS (Domain Name System) lookup does take a little bit of time, but having the flexibility to simply change the DNS entry for the domain to a different IP address can save a lot of time.
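
If you can export the registrar setting from each phone, a quick check like this one points out which phones still have a literal IP address programmed in. It's a sketch under assumptions: the dictionary of phone labels to server strings is a hypothetical export format, and the helper names are made up.

```python
import ipaddress


def is_literal_ip(server):
    """Return True if `server` is an IPv4/IPv6 literal rather than a hostname."""
    try:
        ipaddress.ip_address(server)
        return True
    except ValueError:
        return False


def phones_with_hardcoded_ip(phones):
    """`phones` maps a phone label to its configured registrar string."""
    return sorted(name for name, server in phones.items() if is_literal_ip(server))


# Example inventory using documentation-only addresses and hostnames.
inventory = {
    "lobby": "203.0.113.10",
    "sales-1": "voip.example.com",
    "warehouse": "2001:db8::5",
}
print(phones_with_hardcoded_ip(inventory))
```

Note that a setting with a port tacked on (such as an address followed by :5060) is not recognized as a literal IP by this check, so strip the port first if your phones store it that way.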

Some IP phones allow you to enter a backup registration from another server, which could be handy.

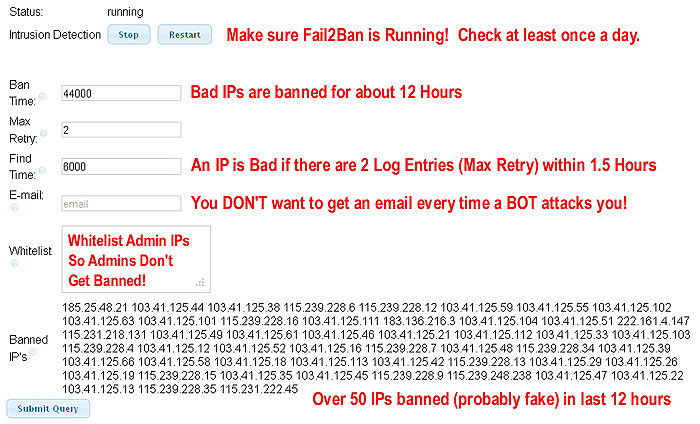

Even without a DDoS attack on your VoIP server there may be a whole LOT of bots trying to register phones on your server. Maybe enough to slow your server down to the point where VoIP calls don't sound good. Our VoIP server is on a Linux machine and uses a program called Fail2Ban to detect hackers' IP addresses, then block each IP in the firewall for a set duration:

If the bots behind each of those 50 banned IP addresses were trying to register a station on our system multiple times a minute (trying extension numbers / passwords), our VoIP system would probably be unusable. You definitely need something to block the bots trying to guess your passwords on any VoIP system connected to the Internet.
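
The general idea behind that kind of blocking, counting failed attempts per IP address and banning the address for a while once it crosses a limit, can be sketched in a few lines. This is not Fail2Ban's code or configuration, just an illustration of the technique. The class name and the thresholds are made up, and a real setup would add a firewall rule rather than just remember the ban in memory.

```python
from collections import defaultdict, deque


class FailureBanner:
    """Ban an IP after too many failed registrations inside a time window."""

    def __init__(self, max_failures=5, window_seconds=600, ban_seconds=3600):
        # All three defaults are illustrative values, not recommendations.
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self.ban_seconds = ban_seconds
        self._failures = defaultdict(deque)  # ip -> timestamps of recent failures
        self._banned_until = {}              # ip -> time the ban expires

    def record_failure(self, ip, now):
        """Record one failed registration; return True if `ip` is now banned."""
        if self.is_banned(ip, now):
            return True
        recent = self._failures[ip]
        recent.append(now)
        # Drop failures that have fallen out of the counting window.
        while recent and now - recent[0] > self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_failures:
            self._banned_until[ip] = now + self.ban_seconds
            recent.clear()
            return True
        return False

    def is_banned(self, ip, now):
        """True while the ban for `ip` has not yet expired."""
        return self._banned_until.get(ip, 0) > now
```

Timestamps are passed in rather than read from the clock, which keeps the sketch easy to test: three quick failures from one address with `max_failures=3` trigger a ban, while the same three failures spread out over more than the window do not.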

What will the hackers do when they guess a password correctly? Use your trunks to make some money until you detect it. That might cost a lot of money in minutes to foreign countries.
